While watching the WWDC21 keynote a few weeks ago, I noticed a recurring theme: several of the upcoming iOS 15 features seem to take advantage of the iPhone’s Neural Engine.

The Neural Engine has been in the iPhone since the A11 Bionic chip debuted in the iPhone 8 and iPhone X in 2017. However, I don’t think most of us have felt the effects of this processor much in the four years since its initial release.

WWDC21 kept giving me the feeling that iOS 15 is the first futuristic iOS update in a long time. Though Apple hasn’t officially confirmed this, I believe that’s because Apple is finally taking full advantage of the Neural Engine.

In this post, I’ll briefly cover what the Neural Engine is, then dive into the new iOS 15 features that seem to use this processor to its full potential. Near the end, I’ll cover the main ways iOS already uses the Neural Engine, so that you can compare it to the upcoming iOS 15 release.

Alright, let’s get into it!

Contents

- What is the Neural Engine on iPhone?
- How iOS 15 features are starting to take advantage of the Neural Engine
  - Your iPhone can read text in your photos
  - iOS 15 features will allow your iPhone to recognize and identify content in your photos
  - Memories in Photos are going to be more sophisticated and “real” feeling
  - Notifications, Widgets, and Do Not Disturb are getting smarter
  - New iOS 15 features will make it easier to get directions with your camera
  - Siri will do more processing on your device
- What can the Neural Engine do without the upcoming iOS 15 features?
- The upcoming iOS 15 features point towards a smarter future for iPhone

What is the Neural Engine on iPhone?

The Neural Engine is a dedicated processor, built into Apple’s A11 Bionic and later chips (iPhone 8, iPhone X, and newer), that handles machine learning operations. Its ability to power machine learning is what makes it so important, so understanding machine learning is key to understanding this processor.

Machine learning refers to software that learns patterns from example data, rather than following only the rules a programmer wrote out by hand.

Traditionally, software needs to work with very specific and controlled data. That’s why Siri might understand your intent with one phrase but fail to understand it with another, even if the intent behind each phrase is identical. Siri needs the data to come in a specific structure, or it fails.

Machine learning is a way of changing that. It allows software to take in less structured data and still process it. If you ever find yourself surprised that Siri understood an oddly phrased request, it’s probably because Siri’s machine learning capabilities have improved.

The Neural Engine is, as the name implies, the engine on your phone that makes this possible.
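To make the difference between rule-based and learned software concrete, here’s a toy Python sketch. It is not how Siri works: the intents, example phrases, and function names are all made up for illustration. The rule-based version only understands phrases it was given word for word, while the “learned” version builds a word-count profile for each intent from labeled examples and picks whichever profile a new phrase most resembles (a simple bag-of-words, nearest-centroid classifier).

```python
import math
from collections import Counter
from typing import Dict, List, Optional, Tuple

# Rule-based approach: a hand-written lookup table. Anything phrased
# differently from these exact strings is not understood.
RULES: Dict[str, str] = {
    "set a timer": "timer",
    "what's the weather": "weather",
}


def rule_based_intent(phrase: str) -> Optional[str]:
    """Return an intent only if the phrase matches a rule exactly."""
    return RULES.get(phrase.lower().strip())


# Learned approach: hypothetical labeled example phrases (training data).
TRAINING: List[Tuple[str, str]] = [
    ("set a timer for ten minutes", "timer"),
    ("start a countdown", "timer"),
    ("timer for pasta", "timer"),
    ("what's the weather today", "weather"),
    ("is it going to rain", "weather"),
    ("how hot is it outside", "weather"),
]


def train(examples: List[Tuple[str, str]]) -> Dict[str, Counter]:
    """Sum the word counts of every example phrase, per intent."""
    profiles: Dict[str, Counter] = {}
    for text, label in examples:
        profiles.setdefault(label, Counter()).update(text.lower().split())
    return profiles


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two word-count vectors."""
    dot = sum(count * b[word] for word, count in a.items())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def learned_intent(phrase: str, profiles: Dict[str, Counter]) -> str:
    """Pick the intent whose word profile is most similar to the phrase."""
    words = Counter(phrase.lower().split())
    return max(profiles, key=lambda label: cosine(words, profiles[label]))


profiles = train(TRAINING)
print(rule_based_intent("could you start a timer"))         # None
print(learned_intent("could you start a timer", profiles))  # timer
```

Real assistants learn from vastly more examples with far richer models, and that kind of number crunching is what the Neural Engine is designed to speed up.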

I realize these concepts can be a bit hard to understand, and I know that I might not be doing the best job at explaining them. I’m trying to keep things short and simple for this post, but if you want a deeper dive, you should check out this post!

How iOS 15 features are starting to take advantage of the Neural Engine

The issue with the iPhone’s Neural Engine isn’t a lack of power; it’s that the iPhone hasn’t used that power to its full extent. For the last four years, it’s been put to work in limited instances, like augmented reality. It’s helpful, but it hasn’t revolutionized the iPhone yet.

I think several iOS 15 features point to a more revolutionary future for the Neural Engine. I doubt any of the new features I’m about to break down would be practical without this processor. So let’s see how these features put the Neural Engine to work.

Your iPhone can read text in your photos

The first of the iOS 15 features that screamed “Machine Learning!” to me was Live Text, which enables your iPhone to read the text in images in your Photos app.

That means you can take a picture of anything with printed text on it, open that photo in the Photos app, and you’ll be able to interact with that text. You can tap on phone numbers in photos, copy and paste text from pictures of documents, and use the search bar in Photos to search for text in photos.

This feature also allows your iPhone to read handwriting in your photos. It works with the Camera app, too, so you can interact with text in your iPhone camera’s viewfinder in real-time.

Without machine learning, a feature like this would be nearly impossible to do well. It’s the reason those online security tests use warped text to prove that you aren’t a robot: reading distorted text has traditionally been challenging for a program.

A smartphone feature that can read text in real time, regardless of font, style, color, or angle, isn’t just extremely useful. It’s also an excellent use of mobile machine learning.

iOS 15 features will allow your iPhone to recognize and identify content in your photos

Another of the new iOS 15 features that uses the Neural Engine is object recognition in Photos, which Apple calls Visual Look Up. This feature works similarly to Live Text, except that it recognizes objects rather than text. The example Apple used is that you can point your iPhone camera at a dog, and your iPhone will recognize not only that it’s a dog but also which breed it is.

The iPhone has been able to pick out faces in your photos for a while, which is a limited form of object recognition. This expansion of that capability will allow your iPhone to quickly look at the unstructured data of a photo and identify objects within it.

This is more impressive (and difficult) than Live Text, because the patterns the Neural Engine has to look for are far less consistent. Your iPhone has to run millions of calculations to reach a conclusion like that.

To identify a dog breed, it’ll first need to see that the dog is an object distinct from the background, then draw boundaries around it, then pick out enough distinctive characteristics to determine that it’s a dog, then pull out even more characteristics to determine which breed it is.

That kind of computing is only practical on a phone, in real time, with a dedicated machine learning processor.
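The staged process described above can be sketched in a few lines of Python. This is a toy, not Apple’s method: the “photo” is a tiny grid where 1 marks pixels that stand out from the background, and the feature values and prototypes (ear floppiness, snout length, coat length) are invented numbers assumed to have already been measured from the boxed region. Real systems use neural networks rather than hand-picked features, but the coarse-to-fine flow (find the object, decide it’s a dog, then decide the breed) mirrors the steps above.

```python
import math
from typing import Dict, List, Optional, Tuple

Box = Tuple[int, int, int, int]  # (top, left, bottom, right), inclusive
Features = Tuple[float, float, float]

# Hypothetical prototypes: (ear_floppiness, snout_length, coat_length), 0 to 1.
ANIMALS: Dict[str, Features] = {"dog": (0.6, 0.6, 0.5), "cat": (0.1, 0.2, 0.5)}
DOG_BREEDS: Dict[str, Features] = {
    "beagle": (0.9, 0.5, 0.2),
    "collie": (0.3, 0.9, 0.9),
    "pug": (0.7, 0.1, 0.1),
}


def bounding_box(mask: List[List[int]]) -> Optional[Box]:
    """Steps 1 and 2: find the foreground pixels and draw a box around them."""
    rows = [r for r, row in enumerate(mask) if any(row)]
    if not rows:
        return None  # nothing stands out from the background
    cols = [c for c in range(len(mask[0])) if any(row[c] for row in mask)]
    return rows[0], cols[0], rows[-1], cols[-1]


def nearest(features: Features, prototypes: Dict[str, Features]) -> str:
    """Return the prototype label closest to the features (Euclidean distance)."""
    return min(prototypes, key=lambda name: math.dist(features, prototypes[name]))


def identify(features: Features) -> Tuple[str, Optional[str]]:
    """Steps 3 and 4: decide what the object is, then refine only if it's a dog."""
    animal = nearest(features, ANIMALS)
    if animal != "dog":
        return animal, None
    return animal, nearest(features, DOG_BREEDS)


photo = [
    [0, 0, 0, 0, 0],
    [0, 1, 1, 0, 0],
    [0, 1, 1, 1, 0],
    [0, 0, 0, 0, 0],
]
print(bounding_box(photo))          # (1, 1, 2, 3)
print(identify((0.85, 0.5, 0.25)))  # ('dog', 'beagle')
```

Running the breed check only after the object has been classified as a dog keeps the second, finer-grained step from wasting effort on cats and everything else.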

Memories in Photos are going to be more sophisticated and “real” feeling

Another feature in the Photos app that’s going to be getting a Neural Engine touchup is Memories.

This feature takes photos from a certain time (usually a specific day) and combines them into a short video for you to view. The idea is that Memories automatically creates video collages of your vacations, parties, anniversaries, and more.

Memories is another of the iOS 15 features getting a significant bump in quality: it’ll automatically add music from Apple Music to your Memories.

The music Photos chooses will not only be paced appropriately with the video, but it should match the content of the video, too. For instance, images from a party should be paired with energetic music, while more thoughtful photos should have slower music attached.

While I don’t use this feature often, the computer science behind it is fascinating. It combines object and scenery recognition; groups photos based on content, location, and time period; separates everyday photos from photos tied to special life events; connects the mood of those photos to the mood of a song you like; pairs them in a slideshow presentation; and times that presentation to the beat of the music.
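One small piece of that, grouping photos into events, can be sketched with a simple rule: sort photos by when they were taken and start a new group whenever there’s a long gap. This Python toy is an assumption for illustration, not how Photos actually works. The three-hour gap and five-photo minimum are made-up thresholds, and it ignores location, content, and music entirely.

```python
from datetime import datetime, timedelta
from typing import List


def group_by_time(
    taken_at: List[datetime], max_gap: timedelta = timedelta(hours=3)
) -> List[List[datetime]]:
    """Split photos into groups wherever consecutive shots are more than max_gap apart."""
    groups: List[List[datetime]] = []
    for ts in sorted(taken_at):
        if groups and ts - groups[-1][-1] <= max_gap:
            groups[-1].append(ts)  # close to the previous photo: same event
        else:
            groups.append([ts])  # first photo or long gap: start a new event
    return groups


def likely_events(groups: List[List[datetime]], min_photos: int = 5) -> List[List[datetime]]:
    """Treat bursts of many photos as special events rather than everyday snapshots."""
    return [g for g in groups if len(g) >= min_photos]


party_start = datetime(2021, 6, 12, 18, 0)
photos = [party_start + timedelta(minutes=45 * i) for i in range(5)]  # a party
photos += [datetime(2021, 6, 13, 9, 0), datetime(2021, 6, 14, 12, 0)]  # everyday shots

groups = group_by_time(photos)
print(len(groups))                 # 3
print(len(likely_events(groups)))  # 1
```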

Memories has always used the Neural Engine. And the next version of iOS is set to increase the intelligence of this feature substantially.

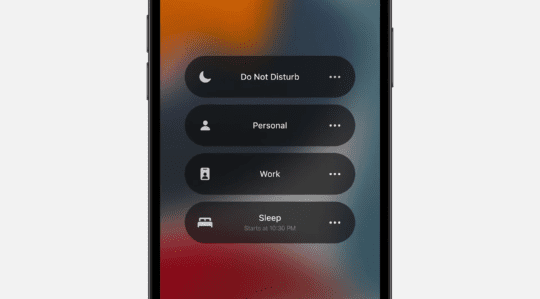

Notifications, Widgets, and Do Not Disturb are getting smarter

Notifications, widgets, and Do Not Disturb are a group of features that take greater advantage of the Neural Engine in iOS 15.

Widgets started using machine learning in iOS 14, but I thought it would be helpful to include them here. Smart Stacks let you stack widgets on top of one another, and your iPhone flips through them throughout the day, showing you what it thinks is the most relevant widget at any given time.

These kinds of decisions are made by analyzing your behavior at different times of day and after using certain apps. The Neural Engine takes this data, interprets it, and then displays your widgets according to this data.
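A toy version of that idea: count which widget you use at each hour, then show whichever widget has the most history around the current hour. This is a hypothetical Python sketch, not Apple’s Smart Stack logic (which isn’t public). The class name, the neighboring-hour weighting, and the widgets are all assumptions.

```python
from collections import defaultdict
from typing import DefaultDict, Dict, Optional, Set


class ToyWidgetRanker:
    """Hypothetical Smart Stack-style ranker based on time-of-day usage counts."""

    def __init__(self) -> None:
        # hour of day (0-23) -> widget name -> number of times used in that hour
        self.counts: DefaultDict[int, Dict[str, int]] = defaultdict(dict)
        self.widgets: Set[str] = set()

    def record_use(self, widget: str, hour: int) -> None:
        self.widgets.add(widget)
        hourly = self.counts[hour % 24]
        hourly[widget] = hourly.get(widget, 0) + 1

    def score(self, widget: str, hour: int) -> float:
        """Usage in this hour counts fully; the hours just before and after count half."""
        total = 0.0
        for offset, weight in ((0, 1.0), (-1, 0.5), (1, 0.5)):
            total += weight * self.counts.get((hour + offset) % 24, {}).get(widget, 0)
        return total

    def top_widget(self, hour: int) -> Optional[str]:
        """Return the best-scoring widget, or None if there's no relevant history yet."""
        if not self.widgets:
            return None
        best = max(sorted(self.widgets), key=lambda w: self.score(w, hour))
        return best if self.score(best, hour) > 0 else None


ranker = ToyWidgetRanker()
for _ in range(3):
    ranker.record_use("Weather", hour=7)
for _ in range(2):
    ranker.record_use("Calendar", hour=9)
for _ in range(4):
    ranker.record_use("Music", hour=18)

print(ranker.top_widget(8))   # Weather
print(ranker.top_widget(12))  # None
```

Giving nearby hours partial credit means a habit like checking the weather at 7:00 still counts at 8:00, instead of requiring an exact match on the hour.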

New iOS 15 features are set to work very similarly.

Notifications will now be grouped in a Notification Summary, so you don’t see less important notifications crowding up your Lock Screen all day. You can customize the Notification Summary feature, or let the Neural Engine handle it for you.

Do Not Disturb is becoming part of a new, broader feature called Focus, which hides certain notifications based on what you’re doing and how they’re categorized. You can manage Focus manually, or let it manage itself intelligently.

All three of these features use very similar machine learning techniques: they learn from your habits to predict and adapt to your behavior.

New iOS 15 features will make it easier to get directions with your camera

This is one of the iOS 15 features that I haven’t heard as much excitement about as I expected. I still find it to be very interesting, especially in a machine learning context.

In Maps in iOS 15, you’ll be able to point your camera around while walking. That will allow you to see AR directions projected on your environment. Say you’re trying to get to the movies and aren’t sure which road to take. You’ll be able to point your iPhone around and see directions highlighted on the streets and buildings around you.

Augmented Reality on iPhone leans heavily on machine learning, and the Neural Engine accelerates much of that work. But this feature goes further, combining AR with image recognition and location detection to provide an incredible real-time experience.

Although it’s only going to be available in a few cities at launch, this feature points to a future where machine learning on iPhone isn’t just helping you out in the background. It’ll be able to help you achieve things instantly that are outside of your comfort zone or ability.

Siri will do more processing on your device

The last of the iOS 15 features that takes greater advantage of the Neural Engine is Siri. Siri has always used machine learning. But that learning hasn’t maxed out the potential of the Neural Engine yet.

That’s because Siri has relied on off-device processing: your iPhone records your request and sends it to an Apple server, which processes it and sends the result back to Siri on your iPhone to act on.

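Apple did this to increase Siri’s power beyond what the iPhone could support. In iOS 15, however, this is set to change. On iPhones with an A12 Bionic chip or later, Siri will process your speech on the device itself, and many requests will be handled without contacting a server at all. That should make Siri faster, more private, and more reliable, since those requests will even work offline.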

I think this update will probably require the least assistance from the Neural Engine, hence its placement at the end of the list. It’s still worth noting, though, because it points to a smarter Siri: a more capable assistant that runs on your iPhone’s hardware rather than on a far-off server.

What can the Neural Engine do without the upcoming iOS 15 features?

And that’s it! As far as I can tell, those are all the new ways iOS 15 features are going to start using the Neural Engine’s raw power.

I wanted to include a brief section at the end of this post highlighting some of the things that the Neural Engine already does on your iPhone. That way, you can compare where it is today to where it’s going to be in a few months.

Hopefully, this will help you appreciate the upcoming changes to iOS in a new light and better understand how features like this come to your iPhone.

The Neural Engine helps you take better pictures

I would say that the biggest use of the Neural Engine on the iPhone since 2017 has been in photography. Every year, Apple shows off how the newest iPhone is going to be able to perform more photographic calculations than the previous generation. Apple says things like, “The image processor is making X million calculations/decisions per photo”.

This refers to the number of calculations the iPhone’s image signal processor and Neural Engine make whenever you take a photo. They look at the colors, lighting, contrasting elements, subject, background, and a multitude of other factors, and determine how to best grade, adjust, and balance all of these elements in an instant.

It’s this machine learning process that has made features like Portrait Mode and Deep Fusion possible. Portrait Mode can isolate you from your background, and Deep Fusion has made low-light photos on iPhone substantially more detailed than before.

The Neural Engine is critical to your iPhone’s camera because of hardware constraints. Due to the iPhone’s size, the camera and its lenses are limited in capability. It’s the computing and machine learning that happen when you take a photo that bring your iPhone photos close to what you’d expect from a professional DSLR.

Machine learning makes Face ID fast, secure, and adaptive

Of course, we can’t talk about the Neural Engine without mentioning why it was created.

That’s right: the Neural Engine was built in large part to make Face ID on the iPhone X possible. In case you didn’t know, Face ID is one of the most sophisticated and complex features of your iPhone.

Face ID doesn’t just compare a 2D image of your face from the front camera to another 2D image of your face. It builds a 3D map of your face, checks that your eyes are open and looking at the screen, and compares the result against the representation of your face it stored when you set up Face ID.

Face ID does all of this in less than two seconds, even while your face is in motion, partially obscured, or at varying angles. It also adapts to the way your face subtly changes from day to day. That’s why you can grow a beard, get older, and change in other subtle ways without needing to rescan your face.

Face ID and the Neural Engine study and learn from your face each time you unlock your iPhone. Without the Neural Engine, Face ID wouldn’t be half as fast, secure, or reliable as it is today.
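The “adapts over time” part can be illustrated with a toy: store a template, accept a new scan only if it’s close enough, and when it is, nudge the template slightly toward that scan. This Python sketch is an assumption for illustration only. Apple hasn’t published the details of Face ID’s matching algorithm, and real face data is far richer than the two made-up numbers used here; the class name, threshold, and rate are hypothetical.

```python
import math
from typing import List, Sequence


class ToyAdaptiveTemplate:
    """Hypothetical face template that slowly follows gradual changes in appearance."""

    def __init__(self, enrolled: Sequence[float], threshold: float = 0.5, rate: float = 0.1) -> None:
        self.template: List[float] = list(enrolled)
        self.threshold = threshold  # maximum distance that still counts as a match
        self.rate = rate  # how far the template moves toward each accepted scan

    def try_unlock(self, scan: Sequence[float]) -> bool:
        if math.dist(self.template, scan) > self.threshold:
            return False  # rejected scans never change the template
        # Accepted: blend the scan into the template (an exponential moving average).
        self.template = [
            (1 - self.rate) * t + self.rate * s for t, s in zip(self.template, scan)
        ]
        return True


# A face that changes a little every day (say, a beard growing in).
adaptive = ToyAdaptiveTemplate([0.0, 0.0])
daily_results = [adaptive.try_unlock([0.02 * day, 0.0]) for day in range(1, 101)]
print(all(daily_results))               # True: every small change was accepted
print(adaptive.try_unlock([2.0, 0.0]))  # True: the template followed the change

# The same final appearance, shown to a template that never adapted.
stale = ToyAdaptiveTemplate([0.0, 0.0])
print(stale.try_unlock([2.0, 0.0]))     # False: too different from enrollment
```

Only updating the template after a successful match is the key design choice here: gradual, day-to-day changes get absorbed, while a sudden, very different face is still rejected.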

The Neural Engine plays a key role in AR experiences on iPhone

Finally, the Neural Engine plays a key role in AR on iPhone. For those who don’t know, Augmented Reality (AR) is a feature that projects 3D models onto your environment through your iPhone camera’s viewfinder. You can test this out for yourself with the Measure app on your iPhone.

This kind of feature combines things like image recognition, spatial awareness, and memory, all of which require the power that the Neural Engine brings to the table.

Fortunately, many of the new iOS 15 features are set to make AR on iPhone even more powerful. It’s a trend I hope continues, as I think AR has the potential to be one of the most integral features of mobile devices.

The upcoming iOS 15 features point towards a smarter future for iPhone

On the whole, I’m very excited about the future these iOS 15 features give us a glimpse of. Several facets of the iPhone feel a bit underutilized, but few as much as the Neural Engine. I look forward to this changing, and I hope you do, too!

For more news, insights, and tips on all things Apple, check out the rest of the AppleToolBox blog!
